The cron job that will speed up your Postgres queries 100x

You know how it happens.

You see someone from the Product team coming to your desk and your heart starts to sink. Then the inevitable question comes:

hey, how did the sale go? How many items did we sell compared to last year’s? Is there a chance we could compare on an hourly basis how much we sold compared to any given Friday?

You don’t have a straight answer and you don’t have a quick way to tell them; your transactions table in postgres has grown way too big to handle analytical queries. It could take minutes to even get a {% code-line %}SELECT count(*) FROM transactions{% code-line-end %} going.

We at Tinybird love PostgreSQL. We have been working with it for longer than we can remember and we know its insides and outs better than most; it is our go to RDBMS system for building data intensive applications and the one we recommend to pretty much anyone who asks.

But when your tables start hitting the tens of millions of rows, the hundreds of millions… Yes, you can create or fine tune indices to keep serving your application requests; yes, you can set replication up so that you can at least run the analytical queries against the replicas instead of against the main database; yes, you can resort to running recurring pre-aggregation queries that then dump a reduced (and poorer) set of data somewhere else so that you can exploit it through whatever BI your Product team chose.

But all of that is costly and none of it is ideal. We can offer a way to cut through all that stuff.

Because none of that gives you the ability to use the full power of SQL to run instant queries against all of your data; none of that enables you to retain the flexibility of cutting and slicing ALL of your data any which way you want and to make the most of it.

What if we told you that, with a single cron job, you can be running analytical queries of your large postgres tables in less than it takes you to run a {% code-line %}SELECT count(*){% code-line-end %} against any of them?

Yep, it is possible; only it wouldn’t use Postgres to actually run the analytical queries but Tinybird. This is what the command would look like:

And here is how to setup your crontab to run the job at midnight every night:

You might want to do this every 5 minutes, a little bit more complex but still a piece of cake, you just need to query for the insertion time.

And that’s that… You now have an always-up-to-date replica of your transactions table and can start querying it with sub-second times.

To make this painless, it’d be good to have an index on insertion_date on your Postgres table; in this case we recommend BRIN index which is compact, suited for append-only tables and pretty fast for these use cases. You could use the following command (bear in mind BRIN index only works when data in disk correlates with the order of the data)

Tinybird can ingest records as fast as you can feed them through your network (all the way up to 4Gb/per minute depending on the network speed), so as long as your Postgres db can spit it out, we can take it.

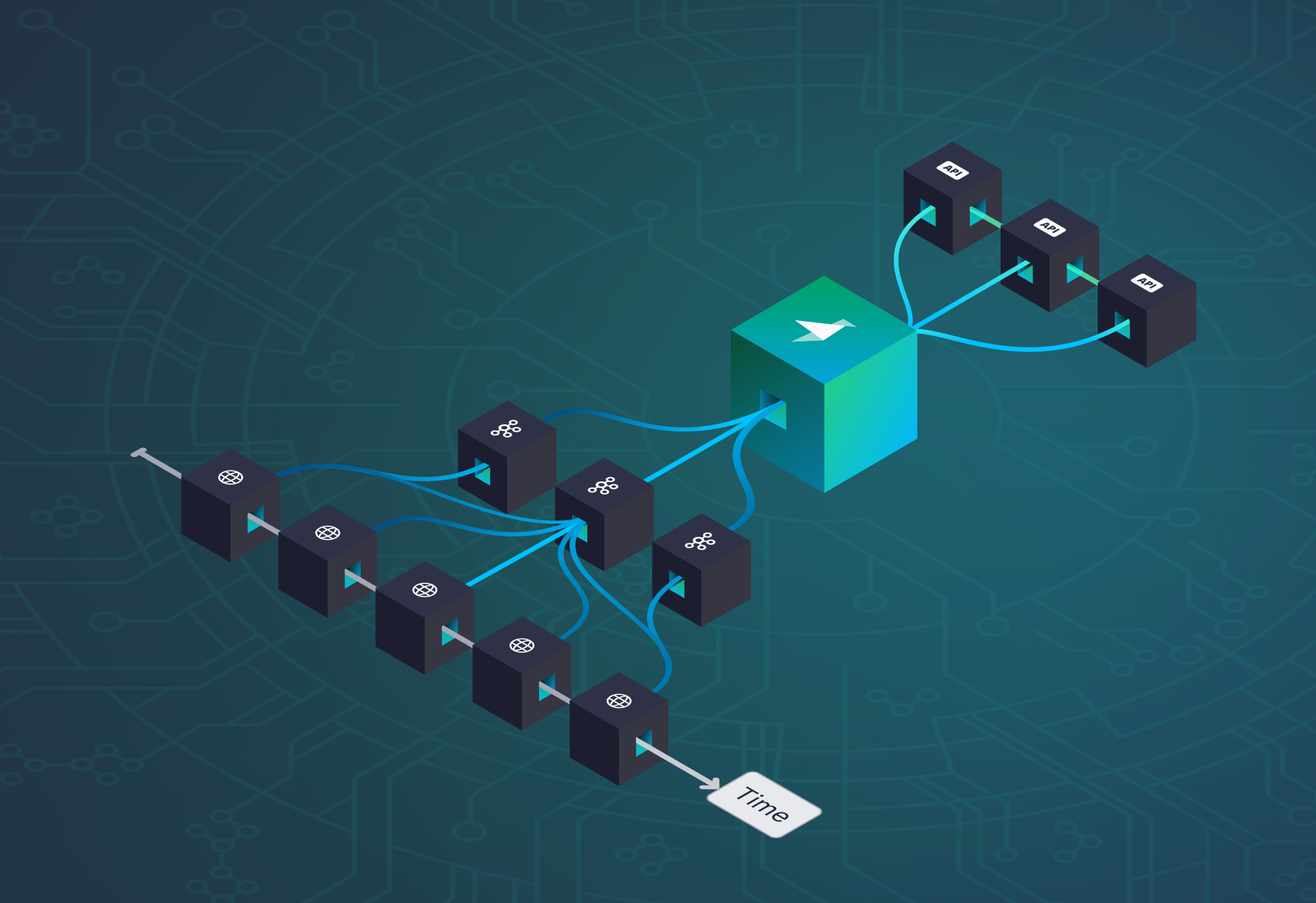

You can then login to Tinybird’s UI and start issuing queries in seconds. Or setup a KPIs dashboard using our APIs.

Oh! And those queries your Product team was asking about? Here’s how fast they run against a 100M rows table.

The upload takes about 5 minutes for these 100M rows (2Gb compressed). Basically is limited by the network and postgres export speed, so it might vary depending on your machine and how close your datacenter is to ours (Google in different regions in our case)

Let’s see some simple examples to see the point where it pays off waiting 5 minutes to upload the whole dataset. All the queries are running PostgreSQL 11.5 with 6 cores per query on a 32Gb machine (16gb shared buffers). The times reported here are the best of many executions.

Count per shipping method

Around 10 times faster.

Orders by day

Around 100 times faster. Let’s get for something more complex.

Avg time for each client to buy again

(You could use neighbor function as well).

In this case the improvement is around 200x.

Just with a couple of these queries, the uploading time to Tinybird pays off even if you upload the dataset every time.

Is your application database growing too big for analysis? You should get in touch. Solving that is fast with Tinybird.