Querying large CSVs online with SQL

Suppose you have a very large CSV file, and you’d like to extract some insights from it. You could use CLI tools like csvkit, clickhouse-local or q, but maybe you don’t want to install another program to run a simple query, or you want a tool that is more visual or interactive.

Using something like Excel is also not an option, as the experience becomes painful once your CSV grows to tens of thousands of rows or more. Some websites will let you upload a larger CSV, but they can be clunky and slow and likely won’t support the upload at a certain file size.

Tinybird lets you…

- Upload CSVs in seconds from your computer or a remote URL (e.g. S3 bucket, Dropbox, Google Cloud Storage, etc...).

- Write SQL queries in your browser and performing compute-intensive operations like joins and aggregations on the data in milliseconds (it uses ClickHouse under the hood).

- And share the results with other people via snapshots or dynamic API endpoints.

Querying a CSV file online with SQL

Let’s take, for example, this repo containing Crunchbase data about startup investments from 2015 available in a nice and clean CSV format. Particularly, we'll use the investments.csv file and make some queries on it.

As you can see from the above screencast, it takes less than a minute to upload and query a CSV using SQL in Tinybird.

Want to try this yourself? Follow these steps:

- Create a free Tinybird account here.

- Navigate to your Tinybird dashboard and click "Add Data Source".

- Choose "Remote URL" as your Data Source (or if you're using a local file, select "File Upload".

- Copy the URL of the CSV file and paste it into the Tinybird UI. Click "Add".

- Tinybird will analyze the file and automatically infer the column data types. Modify your schema if you'd like and click “Create Data Source”. Tinybird will ingest the CSV file into a Data Source table.

- Click “Create Pipe” too start building your SQL queries. You can use Tinybird Pipes to break your queries into smaller, simpler nodes. Each subsequent node can query over prior nodes. This keeps things clean and simple and avoids nested CTEs and the like.

{%tip-box title="Note"%} Tinybird also supports ingestion of NDJSON and Parquet files.{%tip-box-end%}

Here’s the query I made in the video in case you want to use it as a starting point.

Joining two CSV files

The previous dataset doesn’t contain specific data about countries. So if you wanted to know, for example, the ratio of startup funding received for a country versus the population of of that country, you’d have to join the data from the file you just uploaded with another one like this.

Here we get the latest data available for each country:

And here we join the investments data with the country data:

{%tip-box title="Note"%}You will need to give each node an alias when you join them.{%tip-box-end%}

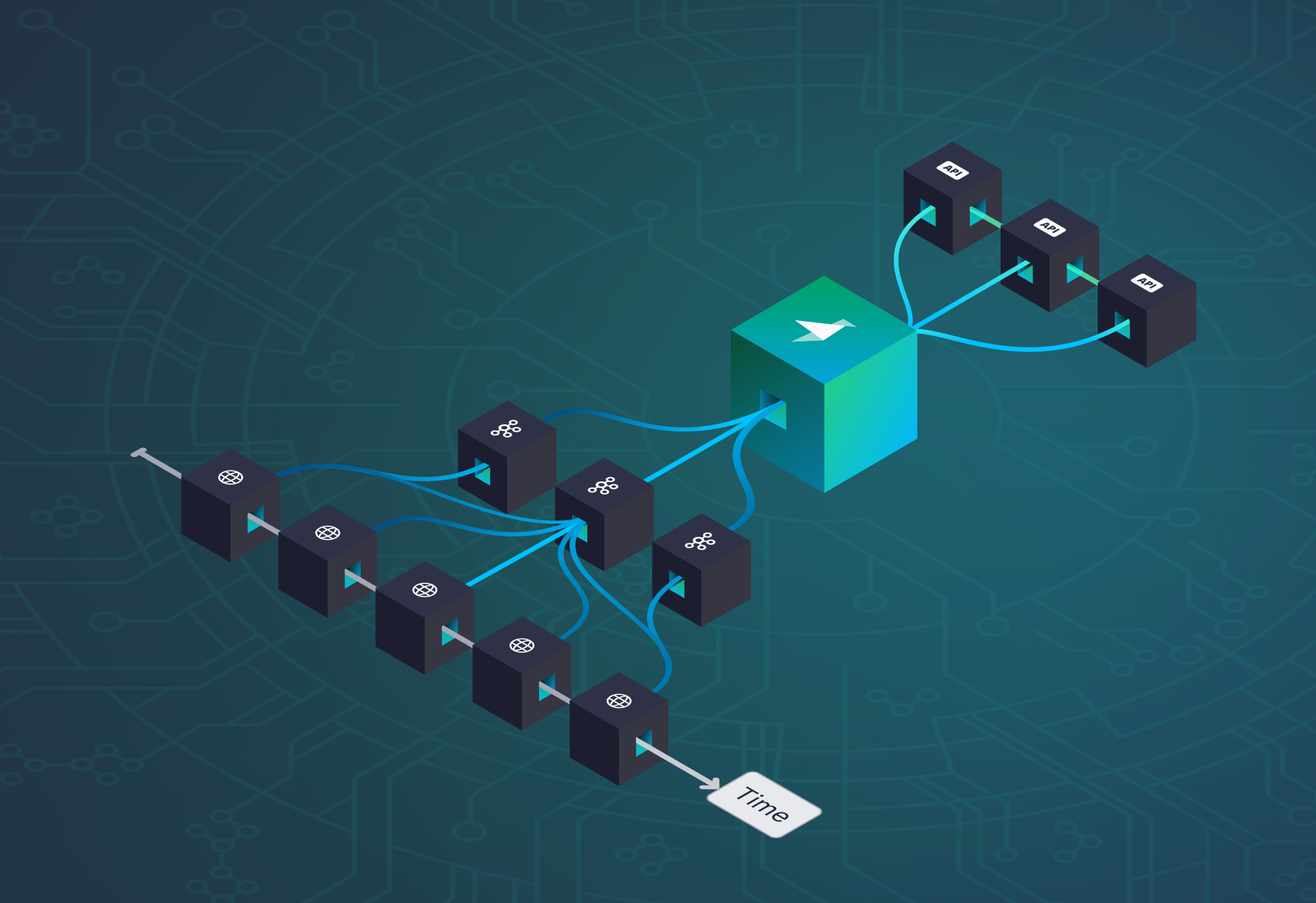

Creating API endpoints from CSV data

Tinybird makes it easy to publish the results of your SQL queries as HTTP endpoints in a click, with options for returning the data as CSV, JSON, NDSJON, or Parquet. Just click “Create API Endpoint”, select the node you want to publish, and you’ll have your endpoint.

If you're new to Tinybird, you can sign up for free. The Tinybird Build Plan is free forever, with no time limit and no credit card required. You can store up to 10 GB of data and query your CSVs as much as you want. Once you publish APIs from your queries, the Build Plan includes up to 1,000 requests per day free of charge.